The GPTQ Algorithm: Enhancing OBQ for Large Language Models

Accurate Post-Training Quantization for Generative Pre-trained Transformers

Introduction

Quantization is a process in which infinite range of numbers are mapped to a grid of small discrete numbers. It is a technique used to reduce the precision of models. This can be achieved by representing the weights and activations of the models in low precision datatypes. These low precision datatypes include int8 or nf4 etc.

Optimal brain quantization

Before we understand the GPTQ algorithm, we need to understand the Optimal Brain Quantization technique. In short Optimal Brain Quantization is represented as OBQ. The logic of GPTQ is based on the OBQ framework.

So, in the quantization process, we want to iterate over every layer of the model, and we want to find the quantized representation of the weights of that particular layer. The quantized version of the weights is represented by Wq, where the original floating-point weights are represented as W. This method of layer wise quantization of the weights is known as the "Layer wise compression problem".

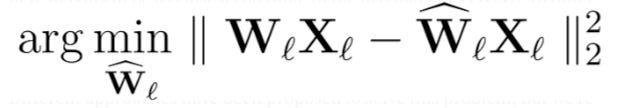

It is important to note that the Wq (quantized weights) must be as close as possible to the W (original floating-point weights). This is important to minimize the performance degradation as much as possible. The loss function for the above description can be represented as follows.

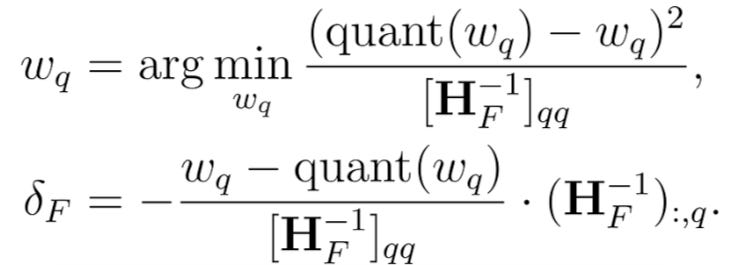

The OBQ method takes inspiration from the pruning technique. This pruning method was first introduced in the paper called "Optimal Brain Surgeon" also called OBS. This method is an approximation technique which used some formulae to select a particular weight 'w' and remove that weight. The loss incurred due to the removal of this weight is compensated by providing an update 'δ'. This update is used to adjust the set of the remaining weights that have not been quantised yet in the same layer.

In the above formulae, the weight to be quantized with is represented as quant(w) and the Hessian is represented as H.

So, in simple terms, OBQ can be divided into two parts:

Pick the easiest weight to be quantized and quantise it.

Compensate the quantization loss in the remaining non quantised weights.

There is one issue with this process, that is when there are outliers in the model weights, it can lead to large quantization errors. And we know that LLM models have a lot of outliers. These outliers are very systematic, and they are of a very high magnitude. More details of the outlier problem have been discussed in the previous section of this report. The outliers are usually quantised at the end of the OBQ process. When it comes to quantising the outliers at the end, what happens is that there are very few non-outlier-non-quantized weights lest to be adjusted for the loss in quantization which would lead to some of these weights to get pushed out of the quantization grid. To overcome this issue a simple method id followed which says that the outliers are to be quantized first as soon as they appear.

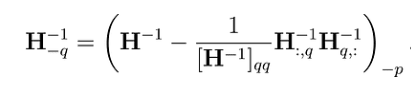

Another challenge of this OBQ method is that the whole process of picking layer by layer and then quantizing and updating the weights is a very compute heavy process. To avoid such high computation, the QBQ process uses an optimisation to calculate the new hessians as shown below.

GPTQ algorithm

The GPTQ algorithm is based on the OBQ method. There have been some significant improvements to the QBQ method in the GPTQ algorithm to help in the computation of very large language models.

The GPTQ algorithm consists of three steps:

Arbitrary Order insight

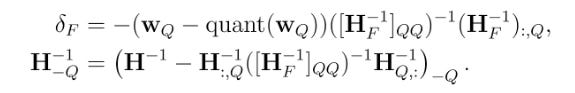

In the OBQ method, the weights are picked to be quantized based on the error which adds up. Whereas the GPTQ model has found that for large language models, the order of picking the weights does not matter and the result would remain significantly unchanged even when the model weights are picked for quantizing in the same order of the LLM model weights. This method speeds up the process since some calculations have to be done once for each column rather than calculating once for each weight element.

Lazy Batch Updates

Only Step 1 would not increase the quantization process speedup by a very significant amount since it requires to update a huge matrix and the computations to be done on each of the entry would be comparatively low. So, this leads to a problem which is memory bound. Hence GPU utilization would not be maximised. To solve this problem, lazy batch updates have been introduced.

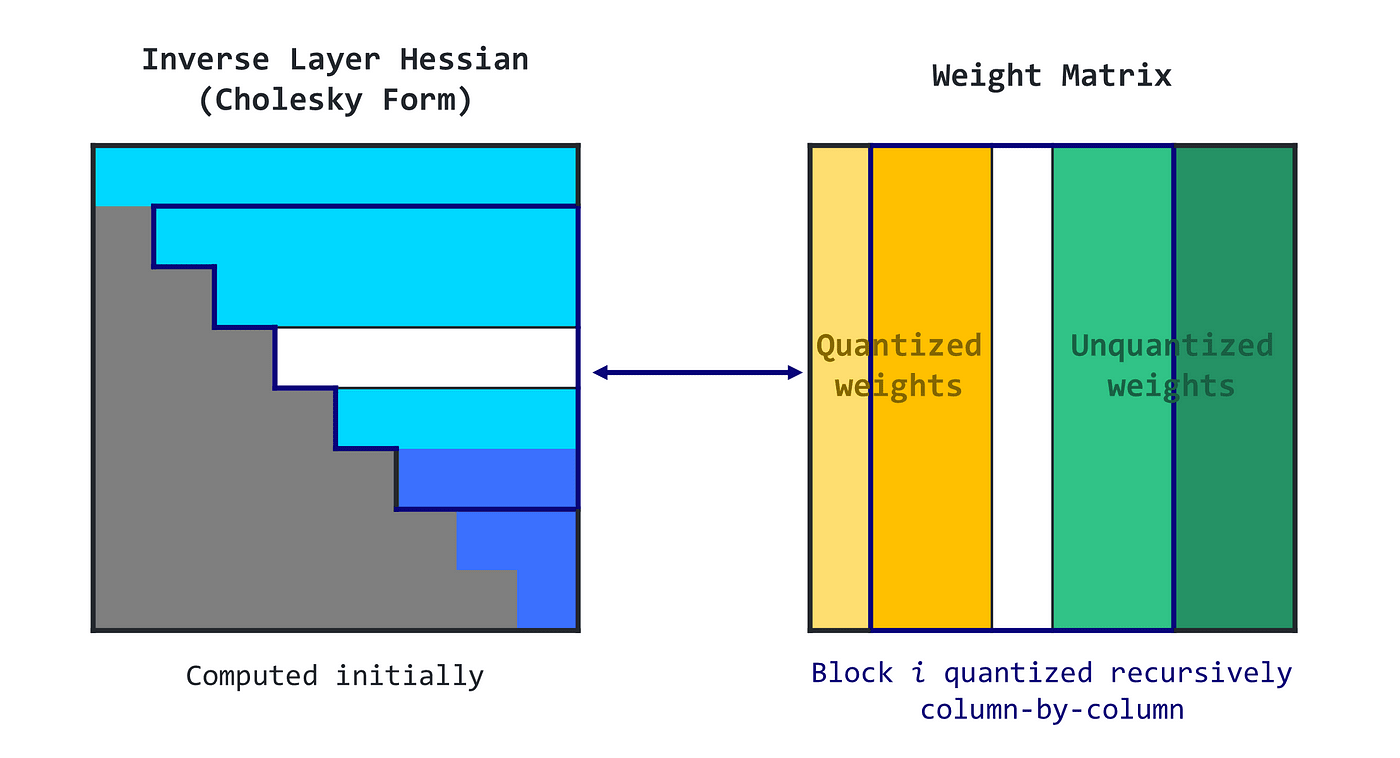

The idea is to exploit the fact that the final outcome of a particular column is influenced by the updates made on that column and not on the subsequent columns. Instead of processing one column at a time the algorithm can work on a batch of columns simultaneously.

Cholesky Reformulation

Though the GPTQ algorithm so far has addressed the scaling up of the quantization process for LLMs, numerical errors or inaccuracies can cause issues. To tackle this issue Cholesky decomposition has been used.

GPTQ algorithm has shown significant results in LLM quantization. The models were compressed using this method with very minimal quantization errors of the overall output and the speedup gain has been significant. The results of the code generation task have been shown in the results section of this report where we can see the speedup and the compression gains.